
A First Try

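Prepare the manifest file tomcat01.yaml. It has two parts, the replica (Deployment) configuration and the Service configuration. These could be two separate files, but here they are merged into one: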

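# Replica section: a Deployment (it manages ReplicaSets, the successor of the RC)
apiVersion: extensions/v1beta1   # Deployment API group on this older cluster; removed in Kubernetes 1.16, use apps/v1 there (which also requires spec.selector)
kind: Deployment
metadata:
  name: myweb                    # object name, unique per kind within the namespace
spec:
  replicas: 2                    # number of pod replicas to keep running
  template:                      # pods are created from this template
    metadata:
      labels:
        app: myweb               # pod label; the Deployment selector (defaulted from these labels) and the Service selector match on it
    spec:
      containers:
      - name: myweb
        image: docker.io/tomcat:8.5-jre8
        ports:
        - containerPort: 8080    # port Tomcat listens on inside the container
# Service section; the three dashes separate the two documents in one file
---
apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  ports:
  - name: myweb-svc
    port: 8099                   # Service port on the cluster IP
    targetPort: 8080             # container port that traffic is forwarded to
    nodePort: 31111              # port opened on every node; the browser uses this one
  selector:
    app: myweb                   # send traffic to pods carrying this label
  type: NodePort                 # expose the Service on a port of each node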

Create the resources with:

kubectl create -f tomcat01.yaml

If the configuration was wrong and you created a faulty Service or pods, delete everything defined in the file with:

kubectl delete -f tomcat01.yaml

Check whether the pods were created and are running:

kubectl get pods -o wide

[root@localhost k8s]# kubectl get pods
NAME                    READY     STATUS              RESTARTS   AGE
myweb-209501739-drdkc   0/1       ContainerCreating   0          40s
myweb-209501739-v26g9   0/1       ContainerCreating   0          40s

Both pods are stuck in ContainerCreating. Run the following command to find out why:

[root@localhost k8s]# kubectl describe pods myweb-209501739-drdkc
Name:           myweb-209501739-drdkc
Namespace:      default
Node:           192.168.1.103/192.168.1.103
Start Time:     Sun, 13 Sep 2020 12:34:49 +0800
Labels:         app=myweb
                pod-template-hash=209501739
Status:         Pending
IP:
Controllers:    ReplicaSet/myweb-209501739
Containers:
  myweb:
    Container ID:
    Image:                  docker.io/tomcat:8.5-jre8
    Image ID:
    Port:                   8080/TCP
    State:                  Waiting
      Reason:               ContainerCreating
    Ready:                  False
    Restart Count:          0
    Volume Mounts:          <none>
    Environment Variables:  <none>
Conditions:
  Type           Status
  Initialized    True
  Ready          False
  PodScheduled   True
No volumes.
QoS Class:      BestEffort
Tolerations:    <none>
Events:
  FirstSeen  LastSeen   Count  From                      SubObjectPath  Type     Reason      Message
  ---------  --------   -----  ----                      -------------  -------- ------      -------
  51s        51s        1      {default-scheduler }                     Normal   Scheduled   Successfully assigned myweb-209501739-drdkc to 192.168.1.103
  <invalid>  <invalid>  1      {kubelet 192.168.1.103}                  Warning  FailedSync  Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""
  1s         <invalid>  3      {kubelet 192.168.1.103}                  Warning  FailedSync  Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

The events show the cause: the kubelet cannot pull registry.access.redhat.com/rhel7/pod-infrastructure:latest, the image for the pod's infrastructure ("POD") container, because the file redhat-ca.crt is missing ("no such file or directory").

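This file is not a credential for the master. It is the CA certificate Docker uses to verify the TLS connection when pulling from registry.access.redhat.com (Docker looks for registry CA certificates under /etc/docker/certs.d/<registry>/). On CentOS 7 it is normally a symlink to /etc/rhsm/ca/redhat-uep.pem, which does not exist here, so the fix is to install that certificate.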

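On the node 192.168.1.103, run the following commands:

1. Download the RPM that contains the certificate:
   wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm
2. Extract redhat-uep.pem from the RPM without installing it and write it to /etc/rhsm/ca/ (if that directory does not exist, create it first with mkdir -p /etc/rhsm/ca):
   rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem
3. Pull the pod-infrastructure image manually, which also confirms the certificate problem is gone:
   docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest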
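Before checking the pods again, it can help to confirm on node 103 that the certificate path from the error message now resolves to a real, non-empty file. The sketch below assumes, as on a typical CentOS 7 docker install, that redhat-ca.crt is a symlink to /etc/rhsm/ca/redhat-uep.pem; the function name cert_ok is hypothetical.

```shell
#!/bin/sh
# Sketch (hypothetical helper): check that the CA certificate Docker looks for
# exists and is non-empty. Defaults to the path from the ErrImagePull message.
cert_ok() {
  cert="${1:-/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt}"
  # [ -s ] follows symlinks, so a symlink whose target is missing or empty fails here
  if [ -s "$cert" ]; then
    echo "ok: $cert"
    return 0
  fi
  if [ -L "$cert" ]; then
    # readlink prints the symlink target as stored, e.g. /etc/rhsm/ca/redhat-uep.pem
    echo "missing: $cert -> $(readlink "$cert") (target missing or empty)"
  else
    echo "missing or empty: $cert"
  fi
  return 1
}
```

Running cert_ok with no argument on node 103 should print an "ok:" line once step 2 has succeeded; a "target missing or empty" message means the symlink still points at a file that is not there.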

Check the pod status again:

[root@localhost k8s]# kubectl get pods -o wide
NAME                    READY     STATUS              RESTARTS   AGE       IP           NODE
myweb-209501739-drdkc   0/1       ContainerCreating   0          11m       <none>       192.168.1.103
myweb-209501739-v26g9   1/1       Running             0          11m       172.17.0.2   192.168.1.103

The first pod is still creating its container, while the second one is already running. Wait a little and check again:

[root@localhost k8s]# kubectl get pods -o wide
NAME                    READY     STATUS    RESTARTS   AGE       IP           NODE
myweb-209501739-drdkc   1/1       Running   0          12m       172.17.0.3   192.168.1.103
myweb-209501739-v26g9   1/1       Running   0          12m       172.17.0.2   192.168.1.103

Both pods are now running, and both were scheduled onto node 192.168.1.103.
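Instead of re-running kubectl get pods by hand while waiting, the READY column can be checked in a loop. A minimal sketch, assuming the column layout shown above (NAME first, READY second); the function name ready_summary is hypothetical:

```shell
#!/bin/sh
# Sketch (hypothetical helper): summarize `kubectl get pods` output read from stdin.
# Prints "<fully ready pods>/<total pods>", e.g. "1/2".
ready_summary() {
  awk 'NR > 1 {                       # skip the header row
         split($2, r, "/")            # READY column, e.g. "0/1"
         total++
         if (r[1] == r[2] && r[2] > 0) ready++
       }
       END { printf "%d/%d\n", ready, total }'
}

# Example wait loop (needs access to the cluster):
#   until [ "$(kubectl get pods -l app=myweb | ready_summary)" = "2/2" ]; do
#     sleep 5
#   done
```

Parsing the plain table keeps the check usable on an old kubectl like the one here; on newer clusters, kubectl wait --for=condition=Ready pod -l app=myweb does the same job.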

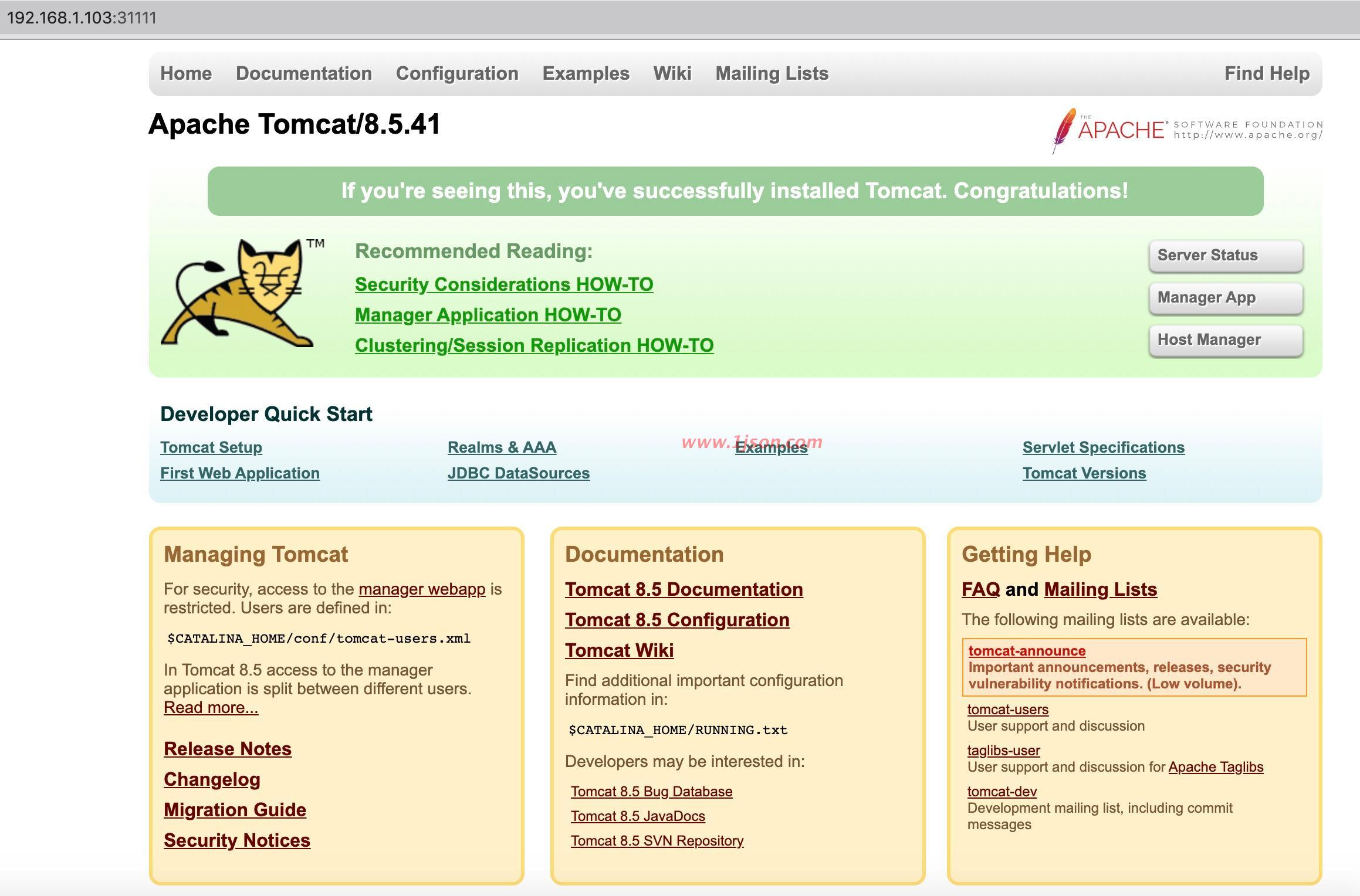

Now open the Service in a browser through the nodePort, for example http://192.168.1.103:31111:

The Tomcat page is served normally.

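⚠️ Note: a 404 can come from the Tomcat image itself. Newer official tomcat images ship an empty webapps directory (the default apps are kept in webapps.dist), so / returns 404 even though Tomcat is running. If the page cannot be reached at all, check whether the iptables firewall is turned off or is blocking port 31111.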
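To tell the two cases in the note apart from the command line, the HTTP status that curl reports can be mapped to a hint. A small sketch; the function name diagnose_http is hypothetical, and the URL in the comment assumes node 192.168.1.103 and nodePort 31111 from this example. curl prints 000 for %{http_code} when no HTTP response arrives at all.

```shell
#!/bin/sh
# Sketch (hypothetical helper): turn an HTTP status code into a troubleshooting hint.
# Usage (node IP and nodePort from this example):
#   diagnose_http "$(curl -s -o /dev/null -w '%{http_code}' http://192.168.1.103:31111/)"
diagnose_http() {
  case "$1" in
    200) echo "ok: Tomcat answered" ;;
    404) echo "Tomcat is up but nothing is served at /: check whether the image's webapps directory is empty" ;;
    000) echo "no HTTP response: check the firewall (iptables) and the nodePort" ;;
    *)   echo "unexpected HTTP status: $1" ;;
  esac
}
```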

On node 103, look for the Tomcat processes:

[root@localhost ~]# ps -ef|grep tomcat
root     49665 49649  0 12:46 ?        00:00:02 /usr/local/openjdk-11/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -Djdk.tls.ephemeralDHKeySize=2048 -Djava.protocol.handler.pkgs=org.apache.catalina.webresources -Dorg.apache.catalina.security.SecurityListener.UMASK=0027 -Dignore.endorsed.dirs= -classpath /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/tomcat -Dcatalina.home=/usr/local/tomcat -Djava.io.tmpdir=/usr/local/tomcat/temp org.apache.catalina.startup.Bootstrap start
root     49912 49897  0 12:46 ?        00:00:02 /usr/local/openjdk-11/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -Djdk.tls.ephemeralDHKeySize=2048 -Djava.protocol.handler.pkgs=org.apache.catalina.webresources -Dorg.apache.catalina.security.SecurityListener.UMASK=0027 -Dignore.endorsed.dirs= -classpath /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/tomcat -Dcatalina.home=/usr/local/tomcat -Djava.io.tmpdir=/usr/local/tomcat/temp org.apache.catalina.startup.Bootstrap start
root     54387 47603  0 12:53 pts/0    00:00:00 grep --color=auto tomcat

Next, kill these processes on 103 and see whether Tomcat is started again:

[root@localhost ~]# kill -9 49665
[root@localhost ~]# kill -9 49912
[root@localhost ~]# ps -ef|grep tomcat
root     56453 47603  0 12:56 pts/0    00:00:00 grep --color=auto tomcat

After a short wait:

[root@localhost ~]# ps -ef|grep tomcat
root     56584 56567 36 12:57 ?        00:00:01 /usr/local/openjdk-11/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -Djdk.tls.ephemeralDHKeySize=2048 -Djava.protocol.handler.pkgs=org.apache.catalina.webresources -Dorg.apache.catalina.security.SecurityListener.UMASK=0027 -Dignore.endorsed.dirs= -classpath /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/tomcat -Dcatalina.home=/usr/local/tomcat -Djava.io.tmpdir=/usr/local/tomcat/temp org.apache.catalina.startup.Bootstrap start
root     56703 47603  0 12:57 pts/0    00:00:00 grep --color=auto tomcat

Check on the master (102):

[root@localhost k8s]# kubectl get pods -o wide
NAME                    READY     STATUS    RESTARTS   AGE       IP           NODE
myweb-209501739-drdkc   0/1       Error     0          22m       172.17.0.3   192.168.1.103
myweb-209501739-v26g9   0/1       Error     0          22m       172.17.0.2   192.168.1.103
[root@localhost k8s]# kubectl get pods -o wide
NAME                    READY     STATUS    RESTARTS   AGE       IP           NODE
myweb-209501739-drdkc   0/1       Error     0          22m       172.17.0.3   192.168.1.103
myweb-209501739-v26g9   1/1       Running   1          22m       172.17.0.2   192.168.1.103
[root@localhost k8s]# kubectl get pods -o wide
NAME                    READY     STATUS    RESTARTS   AGE       IP           NODE
myweb-209501739-drdkc   1/1       Running   1          22m       172.17.0.3   192.168.1.103
myweb-209501739-v26g9   1/1       Running   1          22m       172.17.0.2   192.168.1.103

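At first both pods are in the Error state, then both return to Running. The pod names stay the same and RESTARTS goes from 0 to 1, so Kubernetes did not create new pods: the kubelet restarted the killed containers inside the existing pods (the default restartPolicy is Always), and the 2 replicas we asked for were kept running.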
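Whether pods were restarted in place or replaced can be read off two saved kubectl get pods snapshots: the same names with a higher RESTARTS count mean container restarts, new names mean new pods. A sketch under the assumption that the output has NAME in column 1 and RESTARTS in column 4, as in the tables above; compare_pods is a hypothetical helper.

```shell
#!/bin/sh
# Sketch (hypothetical helper): compare two saved `kubectl get pods` outputs.
# Usage: compare_pods BEFORE_FILE AFTER_FILE
compare_pods() {
  awk '
    FNR == 1 { next }                           # skip the header row of each file
    NR == FNR { before[$1] = $4 + 0; next }     # first file: pod name -> RESTARTS
    {
      seen[$1] = 1
      if (!($1 in before))
        print $1 ": new pod"
      else if ($4 + 0 > before[$1])
        print $1 ": restarted in place (" before[$1] " -> " ($4 + 0) ")"
      else
        print $1 ": unchanged"
    }
    END { for (p in before) if (!(p in seen)) print p ": gone" }
  ' "$1" "$2"
}
```

For example, save kubectl get pods > before.txt on the master, kill the processes on the node, save kubectl get pods > after.txt once the pods are Running again, then run compare_pods before.txt after.txt. With the outputs above, both pods are reported as "restarted in place (0 -> 1)".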